Despite the enormous processing power of the machines that computer manufacturers produce, today's computers are still not fast or capable enough to quench our thirst. As our science and technology become more complex, the processing power of today's "traditional" computers and processors (even "supercomputers") is not enough to handle the resulting volumes of data. For example, to complete tasks such as a comprehensive simulation of the Universe or an analysis of how a particular population evolved over the last 250,000 years, the computers you use at home would have to run continuously for hundreds or even thousands of years! Even on supercomputers, this kind of analysis can sometimes take several months. As the amount of data we want to examine grows, even our gigantic supercomputers start to look like pocket calculators. So, will we one day have computers with the processing power we need and want?

What adds speed and power to today's technology is transistors and microprocessors, which get smaller every year. Why does downsizing mean more speed and power, you might ask? The reason is very simple: where we used to be able to fit 100 transistors in a given space, we can now fit 100,000 in the same space. As transistors are produced with ever smaller gate lengths, more of them fit in the same space.

But there is a problem... If, as Moore's Law predicts, the number of transistors on a microprocessor continued to double roughly every 18 months, microprocessor circuits would be measured at the atomic scale sometime in 2020-2030. Although that prediction held up well for several decades, it is now breaking down: the transistors we put in our processors have become so small that we are starting to run into physical size barriers. Devices that rely only on electric current will therefore no longer suffice; instead, the computer's memory and processing tasks will have to be carried out by exploiting the properties of subatomic particles.

Think of it this way: in 1970 the transistor gate length was around 10 microns (1 micron is one millionth of a meter). By 1980 it had been reduced to 5 microns. It reached 1 micron in 1990 and 0.1 micron in 2000, i.e. 100 nanometers (1 nanometer is one billionth of a meter). It fell to 50 nanometers at the beginning of 2009 and to 32 nanometers by the end of that year, then to 19 nanometers in 2012, 10 nanometers in 2014, and finally 7 nanometers on July 9, 2015, as a result of a 3 billion dollar investment and 10 groundbreaking research efforts.
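To get a feel for where this shrinking leads, here is a rough sketch of a naive Moore's Law extrapolation. It assumes a fixed chip area, so that each doubling of the transistor count shrinks the linear feature size by a factor of the square root of 2, and it starts from the 10 micron figure for 1970. The function name `projected_feature_size_nm` and both assumptions are illustrative only, not a model used by the industry.

```python
import math


def projected_feature_size_nm(start_nm, start_year, year, doubling_months=18.0):
    """Naive extrapolation of feature size (assumption: fixed chip area).

    If the transistor count doubles every `doubling_months`, the area per
    transistor halves, so the linear size shrinks by sqrt(2) per doubling.
    """
    doublings = (year - start_year) * 12.0 / doubling_months
    return start_nm / math.sqrt(2.0 ** doublings)


# Start from 10 microns (10,000 nm) in 1970 and project forward.
for year in (1970, 1980, 1990, 2000, 2015, 2030):
    size_nm = projected_feature_size_nm(10_000.0, 1970, year)
    print(f"{year}: about {size_nm:.3g} nm")
```

Under these assumptions the projected size drops below one nanometer, that is, into the range of individual atoms, well before 2030. The naive model shrinks faster than the historical figures quoted above, which is expected from such a crude simplification.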

As you can imagine, each step requires more research, more money, and more effort. Still, we can keep pushing forward. So where does it end? Just below the nanometer comes the unit called the angstrom (one tenth of a nanometer), in which the size of atoms is measured. Transistors are ultimately physical structures, so they are made up of atoms. Producing parts with features smaller than an atom is impossible, because that would mean going down to the subatomic level, where we can no longer speak of atoms. At that point we step into the world of subatomic particles: the quantum world!

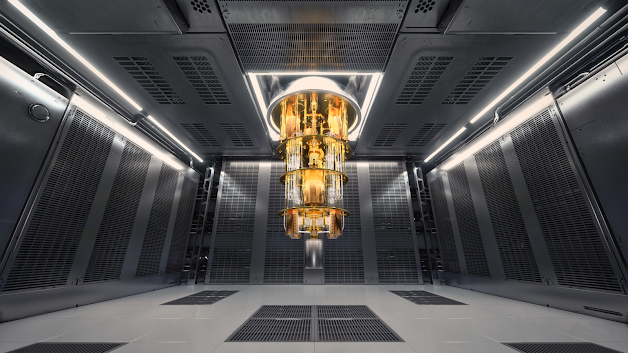

The invention of the transistor revolutionized computing and made modern computers possible. The technological revolution that quantum computing makes possible will, through quantum computers, open the door to the breakthrough innovations of the next few decades. So what is quantum computing? How do quantum computers work? How is quantum engineering done?

What is the Working Principle of Quantum Computers?

In classical computers, the representation of something in the digital world is based on "being" or "not being", that is, on 1 or 0. Quantum computers, on the other hand, process information through the "qubit" (quantum bit), which can correspond to a single atom. Superposition means that an atom can be in two or more states "at the same time". While normal bits take the value 1 or 0, thanks to superposition a qubit can be 1, 0, or a combination of the two simultaneously.

Unfortunately, superposition brings with it some technical problems (errors). Correcting these errors in quantum systems is a problem in itself, because the subatomic world is so sensitive to interaction with any matter or radiation that information carried by, say, an electron is corrupted by any disturbance.

However, in recent years, IBM researchers have overcome one of the obstacles to developing quantum computers that can be used in everyday life, by making it possible to detect two types of errors at the same time without disturbing the delicate state of the qubits. If more obstacles can be overcome, we will be able to make practical use of quantum computers in many areas.

Theory

So, in theory at least, quantum computers have the potential to perform certain operations much faster, and to tackle much harder problems, than any silicon-based computer (the kind we use today). Scientists have already built basic quantum computers that can perform certain operations, but more practical quantum computers are still many years away.
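The idea of superposition can be sketched with a few lines of ordinary code. This is only a classical simulation of a single qubit, not a quantum computer: the state is stored as two amplitudes, and the Hadamard gate used here (a standard textbook gate, not something the article itself mentions) turns a definite 0 into an equal mix of 0 and 1. The function names are illustrative.

```python
import math
import random


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp0, amp1)."""
    amp0, amp1 = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (amp0 + amp1), s * (amp0 - amp1))


def probabilities(state):
    """Probability of measuring 0 and 1: the squared amplitude magnitudes."""
    amp0, amp1 = state
    return (abs(amp0) ** 2, abs(amp1) ** 2)


def measure(state, rng):
    """Simulate one measurement; the result is always a plain 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero = (1.0, 0.0)            # a qubit that is definitely 0
plus = hadamard(zero)        # an equal superposition of 0 and 1
p0, p1 = probabilities(plus)
print("P(0), P(1):", p0, p1)

# Measuring many identically prepared qubits gives 0 about half the time.
rng = random.Random(0)
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1
print("counts of 0 and 1:", counts)
```

Note that each individual measurement still returns a single 0 or 1; the superposition only shows up in the statistics over many runs. This is part of why turning superposition into a real speed-up takes carefully designed algorithms.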

Example

Let's use an example to illustrate how quantum computers, which operate on a principle that goes against our common sense, could deliver such remarkable processing power. Say you want to visit the cities of the Aegean Region as cheaply as possible. Normally you would have to spend a long time comparing the different possible routes and the most affordable ticket and hotel prices; a quantum computer would evaluate all these possibilities at the same time and find the cheapest route for you in the shortest time.
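To see why this kind of search is hard for an ordinary computer, here is a minimal sketch of the classical brute-force approach: try every possible order of cities and keep the cheapest. It is not a quantum algorithm. The five cities are in the Aegean Region, but the travel costs in `COST` are invented purely for illustration, and the starting city is an arbitrary choice.

```python
import itertools
import math

CITIES = ["Izmir", "Manisa", "Aydin", "Denizli", "Mugla"]

# Hypothetical travel costs between city pairs (made-up numbers).
COST = {
    ("Izmir", "Manisa"): 40, ("Izmir", "Aydin"): 100,
    ("Izmir", "Denizli"): 220, ("Izmir", "Mugla"): 210,
    ("Manisa", "Aydin"): 130, ("Manisa", "Denizli"): 200,
    ("Manisa", "Mugla"): 240, ("Aydin", "Denizli"): 120,
    ("Aydin", "Mugla"): 100, ("Denizli", "Mugla"): 140,
}


def leg_cost(a, b):
    """Cost of travelling between two cities, in either direction."""
    return COST[(a, b)] if (a, b) in COST else COST[(b, a)]


def route_cost(route):
    """Total cost of visiting the cities in the given order."""
    return sum(leg_cost(a, b) for a, b in zip(route, route[1:]))


def cheapest_route(cities, start):
    """Check every ordering of the remaining cities, one by one."""
    others = [c for c in cities if c != start]
    candidates = ((start,) + perm for perm in itertools.permutations(others))
    best = min(candidates, key=route_cost)
    return route_cost(best), best


best_cost, best_route = cheapest_route(CITIES, "Izmir")
print(best_cost, " -> ".join(best_route))

# The number of orderings to check grows factorially with the city count.
for n in (5, 10, 20):
    print(n, "cities:", math.factorial(n - 1), "routes from a fixed start")
```

With five cities there are only 24 orderings to check, but the count grows so quickly that the same approach becomes impractical for a few dozen cities, which is the kind of explosion in possibilities the example has in mind.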
